Lidar's Role in Autonomous Navigation

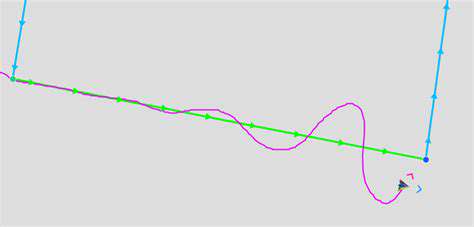

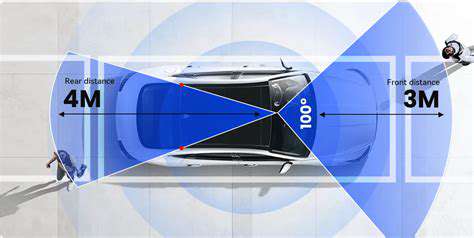

Modern autonomous navigation systems increasingly depend on Lidar (Light Detection and Ranging) technology to generate high-definition 3D environmental maps. This spatial mapping capability proves indispensable for precise vehicle positioning, obstacle identification, and route optimization. By timing how long each laser pulse takes to reflect off a surface and return, these sensors measure object distances and contours with remarkable accuracy, constructing environmental models that form the backbone of reliable self-driving systems.
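The ranging principle can be made concrete in a few lines of Python. This is a minimal sketch that assumes an idealized pulse and uses the vacuum speed of light. The function name `tof_to_range` is illustrative and does not come from any sensor SDK. Because the pulse travels to the target and back, the one-way distance is d = c·t/2.

```python
# Speed of light in vacuum, m/s (light in air is ~0.03% slower; ignored here)
SPEED_OF_LIGHT = 299_792_458.0


def tof_to_range(round_trip_s: float) -> float:
    """Convert a round-trip pulse time (seconds) to a one-way distance (metres)."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels out and back, so halve the total path length.
    return SPEED_OF_LIGHT * round_trip_s / 2.0


if __name__ == "__main__":
    # A return after ~667 ns corresponds to a target roughly 100 m away.
    print(f"{tof_to_range(667e-9):.2f} m")
```

The arithmetic also shows why Lidar timing electronics must be fast. Each nanosecond of round-trip time corresponds to only about 15 cm of range.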

Lidar is also valuable because its repeated 3D scans, captured many times per second, let the perception software distinguish stationary from moving objects in real time. This dynamic interpretation allows autonomous vehicles to comprehend road layouts, pedestrian movements, and surrounding traffic patterns, all of which are essential for safe navigation through complex urban environments.
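One simple way to separate moving points from stationary ones is to compare two consecutive scans. The sketch below is a hypothetical illustration and does not describe any particular system. It assumes that both scans are already expressed in a common world frame, meaning the vehicle's own motion has been compensated. It then flags each current point that has no nearby counterpart in the previous scan. The 0.3 m threshold is an arbitrary placeholder.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def flag_dynamic_points(prev_scan: List[Point], curr_scan: List[Point],
                        threshold_m: float = 0.3) -> List[bool]:
    """Return True for each current point with no previous-scan point within threshold_m.

    Assumes both scans are already in the same (ego-motion-compensated) frame.
    """
    flags = []
    for p in curr_scan:
        # Brute-force nearest neighbour; real systems use a k-d tree or voxel hash.
        nearest = min((math.dist(p, q) for q in prev_scan), default=math.inf)
        flags.append(nearest > threshold_m)
    return flags


if __name__ == "__main__":
    prev = [(0.0, 0.0, 0.0), (5.0, 0.0, 0.0)]
    curr = [(0.0, 0.0, 0.0), (5.8, 0.0, 0.0)]  # second object moved 0.8 m
    print(flag_dynamic_points(prev, curr))
```

Production pipelines typically track flagged clusters over several frames instead of trusting a single pair of scans, because noise and occlusion can also make static points appear "new".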

Advantages of Lidar in Autonomous Systems

The technology's primary strength lies in producing exceptionally detailed 3D point clouds. These datasets enable centimeter-level precision in vehicle localization across diverse settings, from crowded city intersections to poorly marked rural roads. Such granular environmental understanding empowers autonomous systems to make context-aware decisions and adapt to sudden roadway changes.
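Localization against a prior map is often posed as the problem of aligning scan points with map points. The sketch below works in 2D for brevity and assumes the correspondences between scan and map points are already known. Real pipelines must estimate those correspondences, for example inside an ICP loop. Under these assumptions, the least-squares rotation and translation have a closed form. `estimate_pose_2d` is a hypothetical name.

```python
import math
from typing import List, Tuple

Vec2 = Tuple[float, float]


def estimate_pose_2d(scan_pts: List[Vec2], map_pts: List[Vec2]) -> Tuple[float, float, float]:
    """Least-squares (theta, tx, ty) such that map ≈ R(theta) · scan + t."""
    n = len(scan_pts)
    if n != len(map_pts) or n < 2:
        raise ValueError("need at least two matched point pairs")
    # Centroids of both point sets.
    sx = sum(p[0] for p in scan_pts) / n
    sy = sum(p[1] for p in scan_pts) / n
    mx = sum(p[0] for p in map_pts) / n
    my = sum(p[1] for p in map_pts) / n
    # Accumulate cross-product (s) and dot-product (c) terms of the centred pairs.
    s = c = 0.0
    for (ax, ay), (bx, by) in zip(scan_pts, map_pts):
        ax, ay, bx, by = ax - sx, ay - sy, bx - mx, by - my
        s += ax * by - ay * bx
        c += ax * bx + ay * by
    theta = math.atan2(s, c)  # rotation that best aligns the centred sets
    # Translation moves the rotated scan centroid onto the map centroid.
    tx = mx - (math.cos(theta) * sx - math.sin(theta) * sy)
    ty = my - (math.sin(theta) * sx + math.cos(theta) * sy)
    return theta, tx, ty


if __name__ == "__main__":
    scan = [(1.0, 0.0), (0.0, 1.0), (2.0, 2.0)]
    # Same points rotated by 90 degrees and shifted by (3, 4).
    mapped = [(3.0, 5.0), (2.0, 4.0), (1.0, 6.0)]
    print(estimate_pose_2d(scan, mapped))
```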

Unlike passive cameras, Lidar supplies its own illumination, so it performs consistently at night and in low light. Adverse weather is a different matter. Fog, heavy rain, and snow scatter and absorb the laser pulses, which shortens effective range and produces spurious returns. Lidar therefore degrades in these conditions too, though differently from cameras, and it is typically paired with radar to maintain uninterrupted autonomous operation across various climatic conditions.

Lidar's Limitations and Challenges

Despite its technological merits, Lidar implementation faces practical hurdles. Production costs for high-performance units remain substantial, primarily due to complex optical components and precision manufacturing requirements. This economic factor continues to influence adoption rates, particularly for consumer vehicle applications.

Environmental factors can also degrade measurement accuracy. Intense sunlight adds background light at the laser's wavelength, and dense vegetation produces cluttered, partial reflections. Both call for signal processing to keep performance reliable. Current research focuses on developing systems that handle these environmental variables more robustly.
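Radius outlier removal is one generic example of such processing. It discards returns that have too few neighbours, which suits sparse, isolated noise points such as spurious detections triggered by background light. This is a simplified sketch. The radius and neighbour count are placeholder values, and real sensors also apply their own filtering in hardware or firmware.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def radius_outlier_filter(points: List[Point], radius: float = 0.5,
                          min_neighbors: int = 2) -> List[Point]:
    """Keep points that have at least min_neighbors other points within radius (metres)."""
    kept = []
    for i, p in enumerate(points):
        # O(n^2) neighbour count; a spatial index would be used at real point-cloud sizes.
        count = sum(1 for j, q in enumerate(points)
                    if j != i and math.dist(p, q) <= radius)
        if count >= min_neighbors:
            kept.append(p)
    return kept


if __name__ == "__main__":
    cloud = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.0, 0.1, 0.0),  # dense surface patch
             (10.0, 10.0, 10.0)]                                 # isolated noise return
    print(radius_outlier_filter(cloud))
```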

Comparing Lidar with Other Sensors

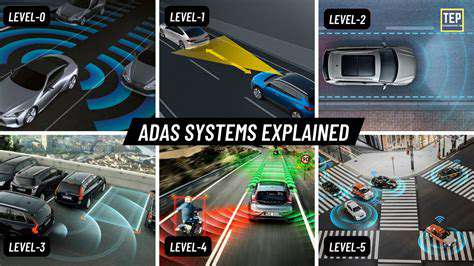

When evaluating sensor technologies, each option presents distinct advantages. Traditional cameras provide rich visual data but struggle with depth perception and variable lighting. Radar systems offer reliable distance measurement but lack the spatial resolution of Lidar.

The most effective autonomous systems employ sensor fusion strategies, combining Lidar with complementary technologies. This multi-sensor approach creates comprehensive environmental models by leveraging each technology's strengths while mitigating individual limitations. The resulting data synthesis significantly enhances system reliability and safety margins.
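A basic building block of fusion is combining independent estimates of the same quantity according to their uncertainty. The sketch below uses inverse-variance weighting and assumes each sensor reports a value with a known, independent Gaussian error. For instance, a hypothetical Lidar range with a variance of 0.01 m² would dominate a radar range with a variance of 0.25 m².

```python
from typing import List, Tuple


def fuse_measurements(measurements: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse independent (value, variance) estimates by inverse-variance weighting."""
    if not measurements:
        raise ValueError("need at least one measurement")
    weights = [1.0 / var for _, var in measurements]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, measurements)) / total
    # The fused variance is never larger than the smallest input variance.
    return value, 1.0 / total


if __name__ == "__main__":
    # Hypothetical readings: (distance in m, variance in m^2)
    print(fuse_measurements([(20.02, 0.01), (20.40, 0.25)]))
```

This weighting is the static special case of the Kalman update. It also shows concretely why adding a noisier sensor still reduces the overall uncertainty.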

The Synergy of Sensor Fusion

Sensor Fusion: The Core Concept

At the foundation of autonomous perception systems lies sensor fusion - the sophisticated integration of multiple sensor data streams. This methodology transcends simple data aggregation, employing advanced algorithms to synthesize coherent environmental models. The process addresses inherent sensor limitations regarding detection range, measurement accuracy, and environmental susceptibility - producing the comprehensive situational awareness required for safe autonomous operation.

The true value emerges from the system's ability to contextualize sensor inputs, enabling vehicles to interpret object dynamics, predict trajectories, and anticipate potential interactions. This predictive capability forms the cognitive foundation for autonomous decision-making in complex traffic scenarios.
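Trajectory prediction is commonly built on a recursive estimator such as the Kalman filter. The example below is a minimal 1D constant-velocity Kalman filter, offered as an illustration under several assumptions: only position is measured, acceleration is modelled as white noise with intensity `q`, and the default noise values are hypothetical. The method `predict_position` extrapolates the current estimate to show how a short-term trajectory forecast follows from the filter state.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ConstantVelocityKF:
    """1-D Kalman filter with state [position, velocity]; a sketch, not a tuned tracker."""
    q: float = 1.0    # process-noise intensity (assumed white acceleration)
    r: float = 0.25   # position-measurement variance, m^2 (hypothetical)
    x: List[float] = field(default_factory=lambda: [0.0, 0.0])
    P: List[List[float]] = field(default_factory=lambda: [[100.0, 0.0], [0.0, 100.0]])

    def predict(self, dt: float) -> None:
        p, v = self.x
        self.x = [p + v * dt, v]  # constant-velocity motion model
        (a, b), (c, d) = self.P
        q = self.q
        # P <- F P F^T + Q, with F = [[1, dt], [0, 1]]
        # and Q = q * [[dt^3/3, dt^2/2], [dt^2/2, dt]]
        self.P = [
            [a + dt * (b + c) + dt * dt * d + q * dt**3 / 3, b + dt * d + q * dt**2 / 2],
            [c + dt * d + q * dt**2 / 2, d + q * dt],
        ]

    def update(self, z: float) -> None:
        (a, b), (c, d) = self.P
        s = a + self.r          # innovation variance (H = [1, 0])
        k0, k1 = a / s, c / s   # Kalman gain
        y = z - self.x[0]       # innovation: measured minus predicted position
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P <- (I - K H) P
        self.P = [[(1 - k0) * a, (1 - k0) * b],
                  [c - k1 * a, d - k1 * b]]

    def predict_position(self, horizon: float) -> float:
        """Extrapolate position `horizon` seconds ahead without changing the filter state."""
        return self.x[0] + self.x[1] * horizon


if __name__ == "__main__":
    kf = ConstantVelocityKF()
    for k in range(1, 51):  # object moving at 2 m/s, sampled every 0.1 s
        kf.predict(0.1)
        kf.update(2.0 * k * 0.1)
    print(kf.x, kf.predict_position(1.0))
```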

LiDAR: Laser-Based Precision

LiDAR systems generate centimeter-accurate 3D environmental representations through laser scanning. The resulting point clouds provide highly detailed information about object geometry and spatial relationships. While cost remains a consideration, the technology's precision and independence from ambient lighting make it particularly valuable for navigating constrained urban environments and complex intersections.
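Each LiDAR return natively consists of a range plus the beam's azimuth and elevation angles. Building a point cloud therefore means converting these values to Cartesian coordinates. The sketch below assumes a sensor frame with x forward, y left, and z up, with angles given in radians. Actual conventions vary by manufacturer, and `polar_to_cartesian` is a hypothetical name.

```python
import math
from typing import Tuple


def polar_to_cartesian(range_m: float, azimuth_rad: float,
                       elevation_rad: float) -> Tuple[float, float, float]:
    """Convert one LiDAR return to sensor-frame x (forward), y (left), z (up)."""
    horizontal = range_m * math.cos(elevation_rad)  # projection onto the x-y plane
    return (horizontal * math.cos(azimuth_rad),      # azimuth measured from x toward y
            horizontal * math.sin(azimuth_rad),
            range_m * math.sin(elevation_rad))       # elevation measured from horizontal


if __name__ == "__main__":
    # A 10 m return, straight ahead, 30 degrees above the horizontal.
    print(polar_to_cartesian(10.0, 0.0, math.radians(30)))
```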

Radar: Range and Speed Expertise

Radar technology specializes in long-range detection and velocity measurement. Its ability to track multiple objects simultaneously at highway distances proves invaluable for maintaining safe following intervals. The system's radio-wave operation ensures consistent performance across various weather conditions, complementing other sensor modalities effectively.
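Radar measures relative speed directly through the Doppler effect. A target closing at radial speed v shifts the echo frequency by f_d = 2v/λ. The sketch below inverts that relation. It assumes a 77 GHz carrier, which is a common automotive radar band, and treats a positive shift as an approaching target.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def radial_velocity(doppler_shift_hz: float, carrier_hz: float = 77e9) -> float:
    """Radial (closing) speed in m/s from a two-way Doppler shift; positive = approaching."""
    wavelength = SPEED_OF_LIGHT / carrier_hz
    # Two-way propagation doubles the shift, hence the division by 2.
    return doppler_shift_hz * wavelength / 2.0


if __name__ == "__main__":
    print(f"{radial_velocity(10e3):.2f} m/s")
```

For example, a 10 kHz shift at 77 GHz corresponds to roughly 19.5 m/s of closing speed. The speed comes from a single echo, with no need to difference positions across frames.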

Camera: Vision and Contextual Understanding

Optical systems deliver the visual context that other sensors cannot - interpreting traffic signals, reading road signs, and recognizing lane markings. Their object classification capabilities enable nuanced understanding of roadway elements, from construction zones to emergency vehicles. The widespread availability and cost-effectiveness of camera technology make it a ubiquitous component in autonomous platforms.

Combining Sensor Data for Robustness

The fusion process creates perceptual systems greater than the sum of their parts. LiDAR's spatial accuracy combines with radar's velocity data and camera's visual context to produce comprehensive environmental models. This multi-modal approach compensates for individual sensor weaknesses while amplifying their collective strengths - resulting in perception systems with exceptional reliability and safety margins.
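A common way to combine LiDAR geometry with camera context is to project each 3D point into the image. A pixel-level classification, such as "pedestrian", can then be attached to a measured distance. The sketch below uses a minimal pinhole model and rests on several assumptions: the points have already been transformed into the camera frame using extrinsic calibration, lens distortion is ignored, and the intrinsics `fx`, `fy`, `cx`, and `cy` are known. The numbers in the usage example are hypothetical.

```python
from typing import Optional, Tuple


def project_to_image(point_cam: Tuple[float, float, float],
                     fx: float, fy: float, cx: float, cy: float,
                     width: int, height: int) -> Optional[Tuple[int, int]]:
    """Project a 3-D point in the camera frame (x right, y down, z forward) to a pixel.

    Returns None if the point is behind the camera or falls outside the image.
    """
    x, y, z = point_cam
    if z <= 0:
        return None  # behind the camera: no valid projection
    u = fx * x / z + cx  # perspective division, then shift to the principal point
    v = fy * y / z + cy
    if 0 <= u < width and 0 <= v < height:
        return int(u), int(v)
    return None


if __name__ == "__main__":
    # Hypothetical 1280x720 camera with 1000 px focal length.
    print(project_to_image((1.0, 0.0, 10.0), 1000, 1000, 640, 360, 1280, 720))
```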

Challenges and Future Directions

Implementation challenges persist in computational requirements and environmental adaptability. Developing efficient algorithms for real-time data fusion demands significant processing power. Future advancements will focus on optimizing these algorithms while enhancing system resilience against sensor conflicts or environmental interference - paving the way for next-generation autonomous platforms.
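One widely used way to reduce the computational load is to thin the point cloud before fusion. The sketch below implements voxel-grid downsampling, which replaces all points inside each cube of side `voxel_size` with their centroid. It illustrates the idea rather than offering an optimized implementation, and the voxel size is a tuning assumption.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def voxel_downsample(points: List[Point], voxel_size: float) -> List[Point]:
    """Replace all points falling in each cubic voxel with their centroid."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    buckets: Dict[Tuple[int, ...], List[Point]] = defaultdict(list)
    for p in points:
        # Integer voxel index along each axis.
        key = tuple(math.floor(c / voxel_size) for c in p)
        buckets[key].append(p)
    # Average each coordinate over the points sharing a voxel.
    return [tuple(sum(axis) / len(pts) for axis in zip(*pts)) for pts in buckets.values()]


if __name__ == "__main__":
    cloud = [(0.1, 0.1, 0.1), (0.3, 0.3, 0.3), (1.5, 0.0, 0.0)]
    print(voxel_downsample(cloud, 1.0))
```

Larger voxels cut processing time further but discard fine geometry. The voxel size therefore trades accuracy against the real-time budget.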