Addressing the Ethical Considerations

Ethical Considerations in AI Development

Artificial intelligence (AI) is rapidly transforming fields from healthcare to finance. However, developing and deploying AI systems raises serious ethical concerns, including algorithmic bias, the potential for misuse, and the impact on human jobs. Understanding and proactively mitigating these concerns is crucial for ensuring that AI benefits society as a whole rather than exacerbating existing inequalities or creating new problems.

A key ethical challenge is the potential for AI systems to perpetuate and amplify existing societal biases. If the data used to train these systems reflects historical prejudices, the resulting AI models can inherit and reinforce those biases. This can lead to discriminatory outcomes in areas like loan applications, criminal justice, and even hiring processes. Addressing this requires careful consideration of data sources and the development of robust mechanisms to detect and mitigate bias in AI systems.

Bias and Fairness in AI Systems

One of the most significant ethical concerns surrounding AI is the potential for bias in AI systems. This bias often stems from the data used to train the models. If the data reflects existing societal prejudices or inequalities, the AI system will likely perpetuate and even amplify those biases. For example, if a loan application dataset predominantly shows loan defaults by a particular demographic group, an AI system trained on this data might unfairly deny loans to members of that group, even if they are creditworthy.

To mitigate this, developers must ensure that the training data is representative and diverse. They must also implement techniques for detecting and mitigating bias within the algorithms themselves. Ongoing monitoring and auditing of AI systems are crucial for identifying and addressing any biases that emerge over time. Transparency and accountability are essential components of this process: they enable stakeholders to understand how AI systems make decisions and to identify areas for improvement or intervention.
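One simple way to make "detecting bias" concrete is to compare outcome rates across groups. The sketch below is illustrative only: the function names, the group labels "A" and "B", and the sample decisions are all assumptions, and the 0.8 cutoff is the commonly cited "four-fifths" rule of thumb rather than a universal standard. A real audit would use several fairness metrics, not just this one.

```python
from collections import defaultdict

def approval_rates(records):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in records:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}

def disparate_impact_ratio(records):
    """Ratio of the lowest group approval rate to the highest one."""
    rates = approval_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group was approved at all, so there is no gap to measure
    return min(rates.values()) / highest

# Hypothetical loan decisions: group A approved 8 of 10, group B approved 5 of 10.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

ratio = disparate_impact_ratio(decisions)
THRESHOLD = 0.8  # assumed cutoff (four-fifths rule of thumb)
if ratio < THRESHOLD:
    print(f"Possible disparate impact: ratio {ratio:.3f} is below {THRESHOLD}")
```

Running a check like this on a schedule, against fresh decisions rather than only the training data, is one way to implement the ongoing monitoring described above.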

Responsibility and Accountability

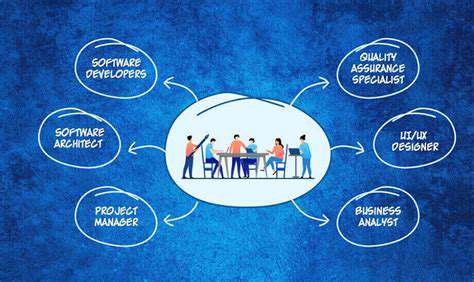

Determining responsibility and accountability for the actions of AI systems is a complex ethical issue. As AI systems become more sophisticated and autonomous, the lines of responsibility may blur between developers, users, and the systems themselves. If an AI system makes a harmful decision, who is held accountable? Addressing this requires establishing clear guidelines and regulations regarding the development, deployment, and use of AI systems. These guidelines should consider the potential consequences of AI actions and establish mechanisms for redress when harm occurs.

Furthermore, ensuring transparency in AI decision-making processes is vital. Understanding how AI systems arrive at their conclusions is crucial not only for accountability but also for building trust and fostering public acceptance. This transparency should extend to the data used, the algorithms employed, and the potential biases that may influence the outcomes. Open discussions and collaboration between stakeholders are essential to navigate these complexities and develop responsible AI practices.

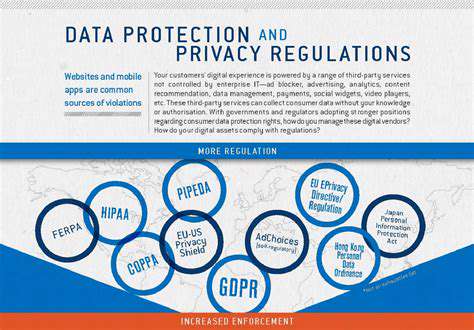

Privacy and Data Security

The use of AI often involves collecting and processing vast amounts of personal data. This raises significant privacy concerns, particularly regarding data security and the potential for misuse. Safeguarding personal data is paramount and necessitates robust security measures to prevent unauthorized access or breaches. Data anonymization techniques and stringent access controls are critical to protect individual privacy.
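As a rough illustration of the anonymization step, the sketch below drops a directly identifying field and replaces another with a keyed hash. Strictly speaking this is pseudonymization, not full anonymization: anyone holding the key can re-link records, and the remaining fields may still identify a person in combination. The field names, the helper names, and the key handling are assumptions made for the example.

```python
import hashlib
import hmac

def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def scrub_record(record, secret_key, id_fields=("email",), drop_fields=("name",)):
    """Return a copy of the record with identifiers dropped or pseudonymized."""
    cleaned = {}
    for field, value in record.items():
        if field in drop_fields:
            continue  # remove fields that are not needed downstream
        if field in id_fields:
            cleaned[field] = pseudonymize(str(value), secret_key)
        else:
            cleaned[field] = value
    return cleaned

# Hypothetical key and record; in practice the key would come from a secrets manager.
key = b"example-key-do-not-use-in-production"
raw = {"name": "Ada Example", "email": "ada@example.com", "age": 36}
safe = scrub_record(raw, key)
```

Using a keyed hash rather than a plain hash means the same email always maps to the same token, so records can still be joined, while an attacker without the key cannot simply hash a list of known emails to reverse the mapping.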

Protecting sensitive data also means complying with data privacy regulations. Developers must implement strong security measures to prevent data breaches and unauthorized access, and must provide mechanisms that let individuals access, correct, and delete the personal data that AI systems process about them. Clear guidelines and regulations on data collection, processing, and usage help build public trust and maintain responsible AI practices.
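The access, correct, and delete mechanisms mentioned above can be sketched as a small interface. This is a minimal in-memory illustration, not a compliant implementation: the class name, method names, and subject identifier are hypothetical, and a real system would also need authentication, audit logging, and deletion from backups and derived datasets.

```python
class PersonalDataStore:
    """Toy in-memory store exposing access, correction, and deletion requests."""

    def __init__(self):
        self._records = {}

    def save(self, subject_id, data):
        """Store a copy of the data held about one individual."""
        self._records[subject_id] = dict(data)

    def access(self, subject_id):
        """Return a copy of everything held about the individual."""
        return dict(self._records.get(subject_id, {}))

    def correct(self, subject_id, field, value):
        """Update a single field; raise KeyError if nothing is held."""
        if subject_id not in self._records:
            raise KeyError(subject_id)
        self._records[subject_id][field] = value

    def delete(self, subject_id):
        """Erase the individual's data; return True if anything was removed."""
        return self._records.pop(subject_id, None) is not None

# Hypothetical usage with a made-up subject identifier.
store = PersonalDataStore()
store.save("user-1", {"city": "Lisbon", "income_band": "B"})
store.correct("user-1", "city", "Porto")
snapshot = store.access("user-1")
was_deleted = store.delete("user-1")
```

Returning copies from `access` keeps callers from mutating stored data by accident, so every change goes through `correct` and can be logged in one place.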