Data Volume Explosion

Modern society generates an enormous amount of information every day. From social media posts to online purchases, and from factory sensor readings to large-scale scientific experiments, digital data accumulates continuously. This growth, often called the data tsunami, has outpaced conventional methods of storing and analyzing information. Keeping up with the steady flow of new data demands new approaches to organizing it and making sense of it. Managing these enormous datasets requires infrastructure that is not just bigger but fundamentally different in its capabilities.

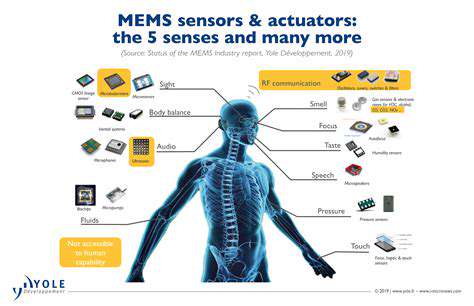

The growing diversity of data sources and formats further complicates processing. Structured database tables sit alongside unstructured documents, photos, and video files, each requiring a different handling approach. This variety is why we need flexible, scalable systems and specialized methods to extract valuable insights from our data.

The Need for Enhanced Processing Power

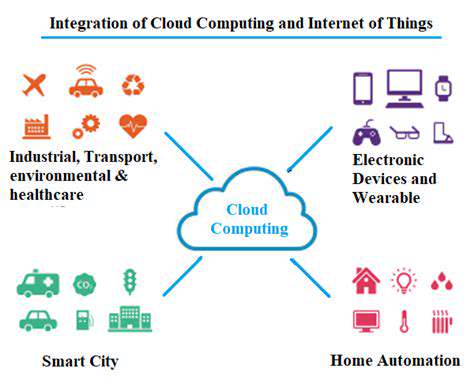

Standard computing setups cannot keep up with today's massive datasets and complex analytical workloads. This gap has driven the creation of more powerful systems, such as cloud platforms and high-performance computing clusters, which now form the backbone of modern data analysis. These systems are essential whenever results are needed quickly: processing data in near real time is what makes it possible to flag a fraudulent credit card transaction as it happens or to suggest a product while a customer is still browsing.

Extracting value from these datasets also requires specialized analytical methods. Machine learning has become particularly important for spotting patterns, forecasting trends, and supporting data-driven decisions. Advances in processing power now make it feasible to analyze very large datasets and turn them into practical knowledge.

Addressing the Challenges

The challenge extends beyond processing capability: we also need smarter ways to store and retrieve information. Building flexible, scalable data systems that can absorb today's growth in data volume is critical. These systems must deliver fast access to the information users need while making efficient use of storage and compute resources.

Massive data collection also raises ethical questions that cannot be ignored. Protecting personal information and ensuring responsible data use require strong safeguards, and clear data governance policies are necessary to comply with regulations and maintain public trust in data-dependent systems.

Real-Time Data Analysis and Model Training

Real-Time Data Ingestion and Processing

Cloud platforms are well suited to handling the continuous stream of real-time data produced by automated systems. Whether it consists of sensor readings, performance metrics, or user activity, this data must be processed immediately to be useful. Cloud-based data pipelines and streaming services make near-real-time processing possible, so the most current information is ready for analysis and decision-making. This capability is what allows automated systems to respond quickly to changing conditions.
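
To make near-real-time processing concrete, here is a minimal Python sketch of one common streaming building block: a time-based sliding window that keeps a running mean of the most recent readings. The `Reading` type, the window length, and the assumption that readings arrive in timestamp order are all illustrative; managed streaming services provide their own windowing, and this is not any particular platform's API.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Reading:
    timestamp: float  # seconds; hypothetical schema for illustration
    value: float


class SlidingWindowMean:
    """Running mean of readings from the last `window_s` seconds.

    Assumes readings arrive in non-decreasing timestamp order.
    """

    def __init__(self, window_s: float) -> None:
        self.window_s = window_s
        self._buf: deque = deque()
        self._total = 0.0

    def add(self, reading: Reading) -> float:
        # Append the new reading and update the running sum.
        self._buf.append(reading)
        self._total += reading.value
        # Evict readings that have fallen out of the time window.
        cutoff = reading.timestamp - self.window_s
        while self._buf and self._buf[0].timestamp <= cutoff:
            self._total -= self._buf.popleft().value
        # The newest reading is always inside the window, so the buffer is never empty here.
        return self._total / len(self._buf)
```

Keeping a running total means each update only does work for the readings it evicts, instead of re-summing the whole window on every new sample.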

Transforming and cleaning the data efficiently is a crucial next step. Cloud tools can automate these processes, correcting inconsistencies and filling gaps so that models are trained on high-quality data. This automation greatly reduces manual work and speeds up the iterative cycle of building better models, a key factor in developing reliable automated systems.
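
As an illustration of fixing inconsistencies and filling gaps, the sketch below treats out-of-range or NaN readings as missing and then fills interior gaps by linear interpolation. The function name and the valid range are hypothetical; real pipelines choose cleaning rules per signal, and interpolation is only one of several gap-filling strategies.

```python
import math
from typing import List, Optional, Sequence


def clean_series(values: Sequence[Optional[float]], lo: float, hi: float) -> List[Optional[float]]:
    """Mark implausible readings as missing, then interpolate interior gaps."""
    # Step 1: treat None, NaN, and out-of-range readings as missing.
    cleaned: List[Optional[float]] = [
        v if v is not None and not math.isnan(v) and lo <= v <= hi else None
        for v in values
    ]
    # Step 2: linearly interpolate between each pair of consecutive valid readings.
    known = [i for i, v in enumerate(cleaned) if v is not None]
    for a, b in zip(known, known[1:]):
        va, vb = cleaned[a], cleaned[b]
        for i in range(a + 1, b):
            frac = (i - a) / (b - a)
            cleaned[i] = va + frac * (vb - va)
    # Leading and trailing gaps have only one valid neighbour, so they stay None.
    return cleaned
```

For example, with a hypothetical valid range of 0 to 100, the series `[1.0, None, 3.0, 999.0, 5.0]` becomes `[1.0, 2.0, 3.0, 4.0, 5.0]`: the missing value and the implausible `999.0` are both replaced by interpolated values.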

Model Training and Optimization in the Cloud

Training sophisticated machine learning models for automated systems demands substantial computing power and time. Cloud platforms address this by letting teams scale resources up or down as needed, removing the limits of local hardware. This elasticity is especially valuable for resource-intensive tasks such as training deep learning models.
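
Scaling resources up or down is often driven by a proportional target-tracking rule, similar in spirit to the one documented for the Kubernetes Horizontal Pod Autoscaler: multiply the current worker count by the ratio of observed to target utilization and round up. The sketch below is an illustration under assumptions; the function name, the use of percentages, and the 1 to 64 worker bounds are hypothetical.

```python
import math


def desired_workers(current: int, utilization_pct: float, target_pct: float,
                    min_workers: int = 1, max_workers: int = 64) -> int:
    """Proportional scaling rule with illustrative bounds."""
    if current < 1 or target_pct <= 0:
        raise ValueError("need at least one worker and a positive target")
    # Scale the worker count by how far observed utilization is from the target.
    proposed = math.ceil(current * utilization_pct / target_pct)
    # Clamp so a noisy metric cannot shrink to zero or grow without limit.
    return max(min_workers, min(max_workers, proposed))
```

For instance, four workers running at 90% utilization against a 60% target would scale to six, while the same four at 30% would scale down to two.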

Cloud environments also provide on-demand access to GPUs and other specialized hardware, which can shorten training times significantly. Researchers and developers can use cutting-edge hardware without large upfront investments. Cloud platforms also offer tools for monitoring and refining model performance, helping ensure that models behave well once they are deployed in real-world operation.

Deployment and Management of Autonomous System Models

Once trained, models for automated systems need secure, scalable deployment, and cloud computing plays a pivotal role here. Cloud deployment platforms allow models to be integrated smoothly into a range of automated systems, where they can support real-time decision-making. Deploying through a shared platform also makes it easier to keep model predictions aligned with the goals of the overall system.

Cloud environments are also well suited to managing and updating models. Continuous monitoring and regular updates let automated systems adapt to new situations, learn from fresh data, and maintain strong performance. Cloud platforms can roll out these updates without interrupting system operation.
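
One way to update a model without disrupting operation is to validate a candidate first and then swap it in atomically, so each request is served by exactly one version. The minimal single-process sketch below assumes a model is any callable from a feature list to a score and that the caller supplies a smoke test; `ModelSlot` is a hypothetical name, not a cloud service API.

```python
import threading
from typing import Callable, List, Tuple

# Assumption: a model is any callable mapping a feature vector to a score.
Model = Callable[[List[float]], float]


class ModelSlot:
    """Serves predictions from the active model and swaps versions atomically."""

    def __init__(self, model: Model, version: str) -> None:
        self._lock = threading.Lock()
        self._model, self._version = model, version

    def predict(self, features: List[float]) -> Tuple[str, float]:
        # Read model and version together so a response never mixes versions.
        with self._lock:
            model, version = self._model, self._version
        return version, model(features)

    def update(self, candidate: Model, version: str,
               smoke_test: Callable[[Model], bool]) -> bool:
        # Validate the candidate before it receives any live traffic.
        if not smoke_test(candidate):
            return False  # keep serving the current model
        with self._lock:
            self._model, self._version = candidate, version
        return True
```

A failed smoke test leaves the old version in place, which is the same idea behind staged or blue/green rollouts, scaled down to a single process.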

Cloud solutions also provide secure storage for model parameters and data, with easy access and version control. This organized, protected storage is vital for the reliability of automated systems, because it keeps critical data both accessible and safe and supports effective management of those systems in real applications.

Enhanced Safety and Reliability Through Remote Monitoring

Improving Equipment Health

Remote monitoring gives technicians real-time data on equipment performance, enabling proactive maintenance. It can reveal potential problems before they turn into expensive failures. By analyzing temperature, vibration, and pressure readings, teams can predict equipment issues, schedule maintenance strategically, and minimize unplanned downtime. This forward-looking approach extends equipment life while reducing costly emergency repairs.

Catching anomalies early is key to preventing major failures. Remote monitoring systems excel at spotting subtle operational deviations, allowing quick intervention to prevent accidents. This continuous monitoring, powered by cloud technology, keeps equipment running safely at peak efficiency.
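
A simple way to spot subtle operational deviations is a rolling z-score: compare each new reading with the mean and standard deviation of recent normal readings and flag it when it lies more than a few standard deviations away. The class name, the window of 50 readings, and the threshold of 3 below are illustrative assumptions, not recommended settings for any particular equipment.

```python
import statistics
from collections import deque


class RollingZScoreDetector:
    """Flags readings that deviate strongly from a rolling baseline."""

    def __init__(self, window: int = 50, threshold: float = 3.0) -> None:
        self.history: deque = deque(maxlen=window)  # recent normal readings
        self.threshold = threshold

    def check(self, value: float) -> bool:
        anomalous = False
        if len(self.history) >= 2:  # stdev needs at least two points
            mean = statistics.mean(self.history)
            std = statistics.stdev(self.history)
            if std == 0:
                # A perfectly flat baseline: any change at all is a deviation.
                anomalous = value != mean
            else:
                anomalous = abs(value - mean) / std > self.threshold
        if not anomalous:
            # Only normal readings update the baseline.
            self.history.append(value)
        return anomalous
```

Keeping anomalous readings out of the baseline stops a developing fault from gradually redefining what counts as normal; the trade-off is that a legitimate, permanent change in operating level keeps being flagged until the detector is reset.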

Optimizing Operational Efficiency

Remote monitoring transforms operational efficiency by providing a complete, real-time view of entire processes. Tracking key metrics lets operators identify bottlenecks, optimize resource use, and streamline workflows. This data-driven approach supports better decisions that boost productivity and cut costs.

Real-time data analysis enables immediate operational adjustments. This responsiveness is crucial in fast-changing environments. Data-driven tweaks help maintain optimal performance, reduce waste, and boost overall efficiency - all contributing to healthier bottom lines.

Enhanced Security Measures

When combined with strong security protocols, remote monitoring significantly boosts protection. Centralized data collection and analysis help detect potential security breaches like unauthorized access. This proactive approach enables quick response to potential threats.
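
As a hypothetical example of detecting unauthorized access from centrally collected logs, the sketch below flags any source with more than a given number of failed logins inside a sliding time window. The `LoginEvent` schema and the default limits (more than 5 failures within 300 seconds) are assumptions for illustration, not values taken from any security product.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Iterable, Set


@dataclass(frozen=True)
class LoginEvent:
    timestamp: float  # seconds
    source: str       # e.g. an IP address or device ID (hypothetical schema)
    success: bool


def suspicious_sources(events: Iterable[LoginEvent], max_failures: int = 5,
                       window_s: float = 300.0) -> Set[str]:
    """Return sources with more than `max_failures` failures within `window_s` seconds."""
    recent = defaultdict(deque)  # source -> timestamps of recent failures
    flagged: Set[str] = set()
    for ev in sorted(events, key=lambda e: e.timestamp):
        if ev.success:
            continue
        q = recent[ev.source]
        q.append(ev.timestamp)
        # Forget failures that are older than the window, relative to this event.
        while q and q[0] <= ev.timestamp - window_s:
            q.popleft()
        if len(q) > max_failures:
            flagged.add(ev.source)
    return flagged
```

Sorting by timestamp first lets the function accept logs merged from several machines in any order.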

Cloud-based monitoring platforms typically include advanced security features like multi-factor authentication and robust encryption. These measures protect sensitive data from unauthorized access - especially important in security-sensitive industries.

Predictive Maintenance Strategies

Remote monitoring enables predictive maintenance by analyzing historical data and real-time sensor readings to forecast potential equipment failures. This approach minimizes downtime and prevents unexpected breakdowns.
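
One simple form of this forecasting is trend extrapolation: fit a straight line to a degradation indicator, such as vibration amplitude, and estimate when it will cross an alarm threshold. The sketch below assumes sample times in hours, sorted in ascending order, and a roughly linear trend; the function name and threshold are hypothetical, and production predictive-maintenance models are usually more sophisticated.

```python
from typing import Optional, Sequence


def hours_until_threshold(times_h: Sequence[float], values: Sequence[float],
                          threshold: float) -> Optional[float]:
    """Least-squares linear trend, extrapolated to the threshold crossing.

    Returns hours after the last sample, or None if the trend is flat or improving.
    Assumes `times_h` is sorted in ascending order.
    """
    n = len(times_h)
    if n < 2 or n != len(values):
        raise ValueError("need at least two paired samples")
    mean_t = sum(times_h) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in times_h)
    if sxx == 0:
        raise ValueError("sample times must not all be equal")
    # Ordinary least-squares slope and intercept.
    slope = sum((t - mean_t) * (v - mean_v) for t, v in zip(times_h, values)) / sxx
    if slope <= 0:
        return None  # no upward trend, so no projected crossing
    intercept = mean_v - slope * mean_t
    crossing_t = (threshold - intercept) / slope
    # If the fitted line is already past the threshold, report zero hours remaining.
    return max(0.0, crossing_t - times_h[-1])
```

For readings of 1.0, 1.5, 2.0, and 2.5 taken at hours 0, 10, 20, and 30 with a threshold of 4.0, the fitted line rises by 0.05 per hour and crosses the threshold at hour 60, about 30 hours after the last sample.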

Cloud-powered predictive maintenance dramatically reduces reactive repairs, leading to substantial cost savings by avoiding emergency fixes. Anticipating problems before they occur is one of remote monitoring's greatest benefits.

Remote Access and Control

Cloud-based remote monitoring allows equipment management from anywhere with internet access. This capability is invaluable for operations spread across multiple locations, enabling quick response to issues in remote areas.

Remote troubleshooting capability reduces travel time and costs for technicians. This accessibility proves particularly valuable for critical infrastructure where quick response times are essential.

Reduced Operational Costs

Implementing remote monitoring can significantly lower operational expenses. Proactive maintenance, optimized resource use, and minimized downtime all contribute to savings. The long-term financial benefits of improved safety and reliability often justify the initial system investment.

Lower maintenance costs combined with reduced downtime translate into substantial savings over time. Anticipating and preventing equipment failures can reduce costs throughout an asset's lifecycle. Together with operational efficiency gains, these savings can make cloud-based remote monitoring a worthwhile investment for organizations of many sizes.

Scalability and Cost-Effectiveness for Autonomous Vehicle Development

Scalability in Autonomous Vehicle Development

Making autonomous vehicle (AV) technology scalable is essential for widespread use. This requires creating adaptable systems that work across different vehicles, environments, and applications. Modular design is key, allowing separate development and testing of components like sensors and decision algorithms. This modular approach simplifies updates and adaptations to new regulations or market needs, ensuring AV technology can evolve with the field. Scalable data infrastructure is equally important to support continuous AV system learning and improvement.
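
Modular design can be expressed in code by having the pipeline depend only on small interfaces, so a sensor module and a decision module can be developed and tested separately. In the Python sketch below, `Sensor`, `Planner`, `FakeLidar`, and `ThresholdPlanner` are hypothetical names, and the braking rule is a toy stand-in for a real decision algorithm.

```python
from dataclasses import dataclass
from typing import List, Protocol


@dataclass
class Obstacle:
    distance_m: float


class Sensor(Protocol):
    def detect(self) -> List[Obstacle]: ...


class Planner(Protocol):
    def decide(self, obstacles: List[Obstacle]) -> str: ...


class FakeLidar:
    """Test double: returns canned detections so planners can be tested without hardware."""

    def __init__(self, distances: List[float]) -> None:
        self.distances = distances

    def detect(self) -> List[Obstacle]:
        return [Obstacle(d) for d in self.distances]


class ThresholdPlanner:
    """Toy decision rule: brake if any obstacle is closer than `stop_distance_m`."""

    def __init__(self, stop_distance_m: float = 10.0) -> None:
        self.stop_distance_m = stop_distance_m

    def decide(self, obstacles: List[Obstacle]) -> str:
        if any(o.distance_m < self.stop_distance_m for o in obstacles):
            return "brake"
        return "cruise"


def step(sensor: Sensor, planner: Planner) -> str:
    # The pipeline depends only on the interfaces, so either side can be swapped independently.
    return planner.decide(sensor.detect())
```

Because `step` never refers to a concrete class, a real lidar driver or a new planner can replace either module without changing the other, which is the property that makes separate development and testing possible.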

Standardizing development practices is another crucial scalability factor. Using consistent programming languages, data formats, and testing methods across projects reduces errors in future deployments. Robust data management systems are also essential to handle the enormous data volumes generated during AV testing and operation, supporting the analysis needed for ongoing development.

Cost-Effectiveness in Autonomous Vehicle Development

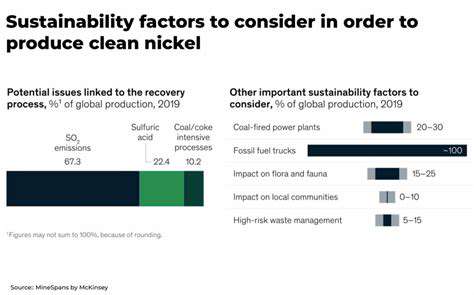

AV development requires massive investment, making cost-efficiency vital at every stage from R&D to deployment. This means optimizing vehicle design, using efficient manufacturing methods, and leveraging existing technologies where possible. Strategic partnerships between automakers, tech companies, and researchers can share costs and accelerate progress.

Data collection and processing costs also need optimization. Developing efficient data acquisition and storage strategies, using cloud resources wisely, and implementing streamlined analysis algorithms can significantly reduce AV development expenses, making the technology more affordable for broader adoption.

Data Management and Processing

Handling the enormous data volumes from autonomous vehicles is a critical development challenge. Strong data management systems must ensure quality, integrity, and accessibility while accommodating diverse data types from sensors to environmental information. These systems must support efficient storage, retrieval, and analysis to enable continuous AV system improvement.

Developing advanced data processing algorithms is essential to extract insights from AV datasets. These algorithms must handle real-time data streams, identify patterns, and make predictions. Effective data management and processing not only improve AV reliability and safety but also contribute significantly to overall cost-efficiency.

Ethical Considerations in Autonomous Vehicle Development

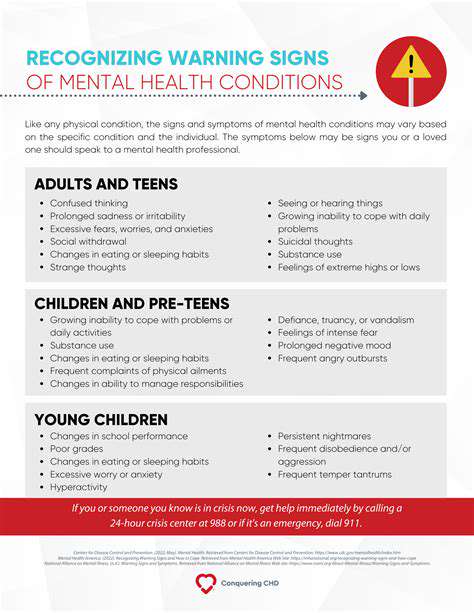

AV development involves serious ethical questions that must be addressed throughout the process. These include safety concerns, liability issues, decision-making protocols, and societal impacts. Thorough ethical analysis is necessary to ensure responsible development and deployment, with strong safety measures and ethical guidelines.

The ethics of autonomous decision-making in emergencies requires particular attention. Clear protocols must address these scenarios, with human safety as the priority. In addition, the potential impacts of AVs on jobs, infrastructure, and the environment must be carefully evaluated throughout development.