Real-time Data Integration

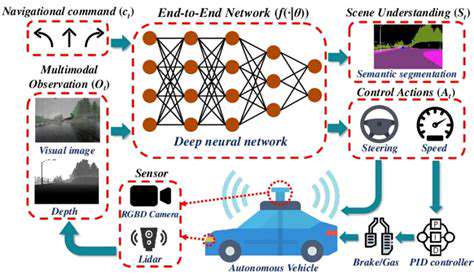

Multi-modal sensor fusion integrates data from diverse sensors, such as cameras, lidar, and inertial measurement units (IMUs), in real time. Combining these sources yields a more accurate and detailed representation of the environment than any single sensor provides, which supports better decision-making in applications like autonomous driving and robotics. The process extracts relevant features from each sensor modality and then merges them into a unified representation.

Synchronizing data from different sensors is a key part of this process. Sensors typically sample at different rates and with different latencies, so temporal discrepancies between readings can significantly degrade the accuracy and reliability of the fused data. Synchronization algorithms align the readings to a common time base so that the combined information reflects a consistent picture of the environment.
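One common way to handle such temporal discrepancies is to resample a fast sensor stream at the timestamps of a slower one. The sketch below is illustrative only: it assumes scalar readings, sorted timestamps on a shared clock, and linear interpolation, and the names `interpolate_at` and `align_to_reference` are hypothetical rather than part of any particular library.

```python
from bisect import bisect_left


def interpolate_at(timestamps, values, t):
    """Linearly interpolate a scalar sensor stream at time t.

    timestamps must be sorted in ascending order.
    Returns None when t lies outside the recorded range (no extrapolation).
    """
    if not timestamps or t < timestamps[0] or t > timestamps[-1]:
        return None
    i = bisect_left(timestamps, t)
    if timestamps[i] == t:
        return values[i]  # exact sample available
    t0, t1 = timestamps[i - 1], timestamps[i]
    v0, v1 = values[i - 1], values[i]
    alpha = (t - t0) / (t1 - t0)
    return v0 + alpha * (v1 - v0)


def align_to_reference(ref_timestamps, timestamps, values):
    """Resample one stream at the timestamps of a reference stream."""
    return [interpolate_at(timestamps, values, t) for t in ref_timestamps]


# Hypothetical data: IMU samples every 10 ms, camera frames at 5, 25 and 40 ms.
imu_t = [0, 10, 20, 30]
imu_v = [0.0, 1.0, 2.0, 3.0]
camera_t = [5, 25, 40]
print(align_to_reference(camera_t, imu_t, imu_v))  # [0.5, 2.5, None]
```

The last camera frame falls outside the IMU recording, so the sketch returns `None` for it instead of extrapolating. A real system would also need to account for clock offsets and per-sensor latency, which this example ignores.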

Enhanced Accuracy and Reliability

Combining data from multiple sensors substantially improves the overall accuracy and reliability of the system. By drawing on the strengths of different sensor modalities, multi-modal sensor fusion can compensate for the limitations of individual sensors. For example, a camera might struggle in low-light conditions, while a lidar sensor might be unable to distinguish subtle textures or colors. Combining their data gives a more robust and reliable understanding of the environment.
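As a simple illustration of why combining sensors helps, the sketch below fuses two estimates of the same quantity with inverse-variance weighting. It assumes each sensor reports an unbiased estimate with a known variance and that the errors are independent. The function name and the numbers are hypothetical.

```python
def fuse_inverse_variance(estimates):
    """Fuse independent estimates of one quantity.

    estimates: list of (value, variance) pairs, each variance > 0.
    Returns (fused_value, fused_variance).
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    fused_variance = 1.0 / total  # never larger than the smallest input variance
    return fused_value, fused_variance


# Hypothetical distance estimates in meters: a camera in low light (noisy)
# and a lidar (more precise).
camera = (10.0, 4.0)
lidar = (12.0, 1.0)
value, variance = fuse_inverse_variance([camera, lidar])
print(value, variance)
```

With these inputs the fused estimate is about 11.6 m with a variance of about 0.8, which is closer to the lidar reading and less uncertain than either sensor alone. If the independence assumption does not hold, the fused variance will be too optimistic.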

This enhanced accuracy is essential for applications requiring high precision, such as autonomous navigation systems. Fusing data from multiple sensors reduces the likelihood of errors or misinterpretations, leading to safer and more predictable behavior.

Applications in Autonomous Systems

Multi-modal sensor fusion is playing an increasingly important role in autonomous systems. By combining data from various sensors, autonomous vehicles can build a more comprehensive understanding of their surroundings, enabling safer and more efficient navigation. This includes detecting pedestrians, obstacles, and traffic signals with greater accuracy and reliability.

This technology also enables robots to interact with the real world more intelligently. By combining visual, auditory, and tactile data, robots can recognize objects, manipulate them, and adapt to their environment with greater dexterity and precision.

Challenges and Future Directions

Despite its significant advantages, multi-modal sensor fusion faces several challenges. One is developing robust algorithms that can handle noisy and inconsistent data from different sensors. Another is the computational cost of processing and integrating data from multiple sources.

Future research in this area will focus on developing more efficient algorithms and techniques for data fusion, as well as improving the accuracy and robustness of sensor systems. This will lead to more sophisticated and intelligent systems capable of performing complex tasks in dynamic and unpredictable environments, ultimately advancing the capabilities of autonomous systems and robots.

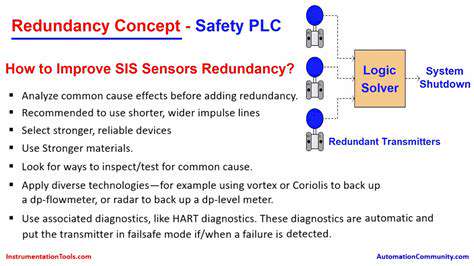

Redundancy Strategies for Enhanced Reliability

Redundant Sensor Systems for Enhanced Fault Tolerance

Redundant sensor systems underpin robust perception and reliable decision-making in autonomous driving. Using multiple sensors of the same or different types lets the system detect and mitigate sensor failures, which is essential for safety in dynamic environments and in challenging conditions such as adverse weather, obscured visibility, and complex traffic. Redundancy acts as a safety net: the system can continue to perceive and interpret the environment accurately even if one or more sensors fail or produce erroneous data. This matters most in critical situations that require immediate and precise responses.
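One simple way to realize this kind of fault tolerance is median voting across redundant readings of the same quantity. The sketch below is a minimal illustration, not a production design: the sensor names, the `vote` function, and the fixed `tolerance` threshold are all assumptions made for the example.

```python
from statistics import median


def vote(readings, tolerance):
    """Pick a consensus value and flag suspect sensors.

    readings: dict mapping sensor name -> value, or None if the sensor
              produced no output.
    tolerance: largest absolute deviation from the median that is still
               treated as agreement.
    Returns (consensus, suspects), where suspects is a sorted list of names.
    """
    available = {name: v for name, v in readings.items() if v is not None}
    if not available:
        raise ValueError("no sensor produced a reading")
    consensus = median(available.values())
    suspects = sorted(
        name
        for name, v in readings.items()
        if v is None or abs(v - consensus) > tolerance
    )
    return consensus, suspects


# Hypothetical range readings in meters; the camera estimate is far off.
readings = {"lidar": 25.1, "radar": 24.8, "camera": 40.0}
consensus, suspects = vote(readings, tolerance=1.0)
print(consensus, suspects)  # 25.1 ['camera']
```

The median ignores a minority of outliers, so with three sensors the consensus survives one faulty reading. It cannot help when most sensors fail in the same way, which is one reason to mix different sensor types.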

Different sensor modalities, such as lidar, radar, and cameras, can be integrated redundantly. This heterogeneous redundancy exploits the fact that each sensor has different strengths and weaknesses: lidar excels at precise distance measurement, while cameras provide rich visual information. Combining them makes the perception system more robust and reduces the risk of missing critical environmental information, which is essential for the safe and reliable operation of the autonomous vehicle.

Data Fusion and Decision Making in Redundant Systems

A critical aspect of redundant sensor systems is fusing and processing the data from multiple sources effectively. Fusion algorithms combine the information from different sensors into a unified and consistent representation of the environment. Because overlapping measurements can be cross-checked against each other, this process also supports error detection and correction. The result is both greater resilience to sensor failures and higher overall perception accuracy, allowing the vehicle to make reliable decisions and react safely to changing conditions in complex driving scenarios.

Robust decision-making algorithms are fundamental to using redundant sensor data effectively. These algorithms must handle potential inconsistencies between sensors, which requires a mechanism for estimating the reliability of each sensor's output so that trustworthy data can be prioritized. When building a unified representation of the environment, the system should weigh each input by that reliability, so that the autonomous vehicle maintains safe and reliable operation even when sensors provide conflicting information.
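The idea of weighing each sensor by its reliability can be sketched as a weighted average whose weights are updated over time. The scheme below is one possible illustration, not a description of any specific system: the reliability scores in the range 0 to 1, the agreement `tolerance`, and the update `rate` are all hypothetical parameters.

```python
def fuse_with_reliability(readings, reliability):
    """Weighted average of readings, using reliability scores as weights."""
    total = sum(reliability[name] for name in readings)
    if total <= 0:
        raise ValueError("all sensors have zero reliability")
    return sum(reliability[name] * value for name, value in readings.items()) / total


def update_reliability(readings, reliability, fused, tolerance, rate=0.2):
    """Nudge each score toward 1 if the sensor agreed with the fused value,
    and toward 0 if it did not (exponential moving average)."""
    updated = {}
    for name, value in readings.items():
        agreed = 1.0 if abs(value - fused) <= tolerance else 0.0
        updated[name] = (1 - rate) * reliability[name] + rate * agreed
    return updated


# Hypothetical readings: the camera disagrees with lidar and radar.
readings = {"lidar": 10.0, "radar": 10.0, "camera": 20.0}
reliability = {"lidar": 1.0, "radar": 1.0, "camera": 1.0}

fused = fuse_with_reliability(readings, reliability)
reliability = update_reliability(readings, reliability, fused, tolerance=4.0)
fused_again = fuse_with_reliability(readings, reliability)
print(fused, fused_again)
```

After one update the camera's score drops and the second fused value moves closer to the two sensors that agree. Because agreement is judged against the fused value itself, a large outlier can pull that value enough to penalize healthy sensors, so a practical design would likely compare against a more robust reference such as the median.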