Data Acquisition from Multiple Sources

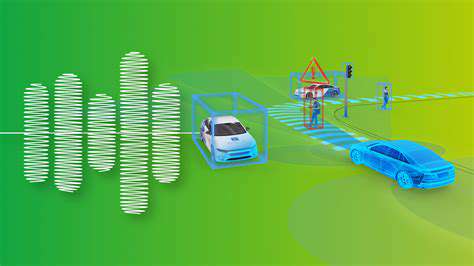

Sensor fusion combines data from several sensors to build a more complete and reliable picture of the environment than any single sensor can provide. Different sensor types supply complementary information, such as visual, auditory, and tactile inputs, so a blind spot or failure in one can be covered by another. Gathering data from multiple sources is therefore the first step toward accurate and robust applications.

Each sensor type has its own strengths and weaknesses, and a fusion system exploits these differences. For instance, cameras excel at capturing visual detail, while accelerometers measure acceleration and are therefore well suited to detecting movement. Combining these capabilities gives a richer, more detailed view of the environment.

Integrating Diverse Sensor Data

The key challenge in sensor fusion lies in integrating data from various sources. Different sensors often report in different units, at different sampling rates, and with varying levels of precision. A robust data processing pipeline is therefore essential for combining the outputs of these disparate sources.

Data normalization and calibration play a crucial role in this process. These steps ensure that data from different sensors is comparable and can be used together without introducing biases. The data must be standardized to a common scale and format to allow for accurate integration.

Calibration and Data Normalization

Calibration is the process of adjusting sensor readings to account for inaccuracies and inconsistencies, so that data from different sensors is comparable. For example, if one sensor consistently overestimates temperature by 2 degrees, calibration corrects this systematic error by subtracting the 2-degree offset from every reading.
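The 2-degree example can be sketched in a few lines of Python. This is a minimal illustration, assuming the sensor's error is a constant offset and that readings from a trusted reference instrument are available. The function names `estimate_offset` and `apply_calibration` and the sample values are hypothetical.

```python
from statistics import mean


def estimate_offset(raw, reference):
    """Estimate a constant offset as the mean difference between the
    sensor's raw readings and a trusted reference (assumed available)."""
    return mean([r - ref for r, ref in zip(raw, reference)])


def apply_calibration(reading, offset):
    """Remove the systematic offset from a single raw reading."""
    return reading - offset


# Illustrative values only: the sensor reads about 2 degrees too high.
raw_readings = [22.1, 24.0, 26.9]
reference_readings = [20.0, 22.0, 25.0]

offset = estimate_offset(raw_readings, reference_readings)
corrected = [apply_calibration(r, offset) for r in raw_readings]
```

Real sensors may also need a gain (scale) correction or a temperature-dependent model. A constant offset is only the simplest case.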

Data normalization, on the other hand, ensures that data from different sensors is expressed in a consistent unit and scale. This may involve converting readings to a shared unit, such as converting Celsius readings to Fahrenheit so that every temperature sensor reports the same quantity, or rescaling values to a common range. Proper calibration and normalization are fundamental to the success of any sensor fusion system.
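As a rough sketch, normalization can mean a unit conversion, a rescaling to a common range, or both. The helpers below are illustrative (the names `celsius_to_fahrenheit` and `min_max_scale` are not from any particular library), and min-max scaling is just one common choice of standardized scale.

```python
def celsius_to_fahrenheit(celsius):
    """Convert a temperature from degrees Celsius to degrees Fahrenheit."""
    return celsius * 9.0 / 5.0 + 32.0


def min_max_scale(values):
    """Rescale a list of readings linearly to the range [0, 1].

    If all readings are identical there is no spread to scale,
    so every value maps to 0.0.
    """
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Bring a Celsius sensor into the same unit as a Fahrenheit sensor.
sensor_a_f = [celsius_to_fahrenheit(c) for c in [20.0, 25.0, 30.0]]

# Put readings from a differently scaled sensor on a 0..1 scale.
scaled = min_max_scale([10.0, 20.0, 30.0])
```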

Data Processing and Filtering

Raw sensor data is often noisy and contains irrelevant information. Data processing techniques, such as filtering and smoothing algorithms, suppress this noise and discard readings that carry no useful signal, which makes the data more reliable for analysis.
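One of the simplest smoothing algorithms is a moving average. The sketch below assumes evenly spaced samples. The function name `moving_average` and the window size are illustrative choices.

```python
from collections import deque


def moving_average(samples, window=3):
    """Smooth a sequence by averaging each sample with up to
    `window - 1` of the samples that precede it."""
    if window < 1:
        raise ValueError("window must be at least 1")
    recent = deque(maxlen=window)  # holds only the latest `window` samples
    smoothed = []
    for sample in samples:
        recent.append(sample)
        smoothed.append(sum(recent) / len(recent))
    return smoothed


noisy = [1.0, 2.0, 3.0, 4.0]
print(moving_average(noisy, window=2))  # [1.0, 1.5, 2.5, 3.5]
```

A larger window removes more noise but makes the output lag further behind real changes in the signal.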

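Filtering methods like Kalman filters are commonly used in sensor fusion applications. A Kalman filter alternates between predicting the system's state from a model and correcting that prediction with each new measurement, weighting the two by their respective uncertainties. The result is an estimate of the underlying state with much of the measurement noise removed.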
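To make the idea concrete, here is a minimal one-dimensional Kalman filter. It assumes the underlying value is roughly constant (a random-walk model) and that the measurement and process noise variances are known. The function name `kalman_1d`, its parameters, and the sample values are illustrative, not taken from a specific library.

```python
def kalman_1d(measurements, meas_var, process_var, x0=0.0, p0=1.0):
    """Estimate a slowly varying scalar from noisy measurements.

    x is the state estimate and p is its variance. Each step predicts
    (uncertainty grows by process_var) and then updates using the
    new measurement z.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: the state is assumed unchanged, but less certain.
        p = p + process_var
        # Update: the gain k decides how far to move toward z.
        k = p / (p + meas_var)
        x = x + k * (z - x)
        p = (1.0 - k) * p
        estimates.append(x)
    return estimates


# Illustrative readings scattered around a true value of 5.0.
readings = [5.2, 4.7, 5.1, 4.9, 5.3, 4.8]
estimates = kalman_1d(readings, meas_var=0.1, process_var=0.001, x0=5.0)
```

Practical fusion systems usually track a state vector (position, velocity, orientation) with matrix forms of the same predict and update steps.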

Sensor Fusion Algorithms

Different sensor fusion algorithms are employed to combine data from various sensors. These algorithms use mathematical models to integrate data and produce a coherent output. Each algorithm has strengths and weaknesses in different situations.

Popular fusion algorithms include Kalman filter-based methods and Bayesian approaches. The right choice depends on the specific application and on the characteristics of the sensors being used, such as their noise levels and update rates.
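A simple Bayesian case is fusing two independent measurements of the same quantity. If each is modeled as a Gaussian, the fused estimate weights each measurement by the inverse of its variance. The sketch below assumes independent Gaussian errors. The function name `fuse_gaussian` and the example numbers are hypothetical.

```python
def fuse_gaussian(mean_a, var_a, mean_b, var_b):
    """Fuse two independent Gaussian estimates of the same quantity.

    Returns the fused mean and variance. The less noisy sensor
    (smaller variance) receives the larger weight.
    """
    fused_var = 1.0 / (1.0 / var_a + 1.0 / var_b)
    fused_mean = fused_var * (mean_a / var_a + mean_b / var_b)
    return fused_mean, fused_var


# Two equally noisy range sensors reporting 10 m and 12 m.
distance, variance = fuse_gaussian(10.0, 4.0, 12.0, 4.0)
print(distance, variance)  # 11.0 2.0
```

The fused variance is smaller than either input variance, which is the formal sense in which combining sensors improves reliability.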

Real-World Applications

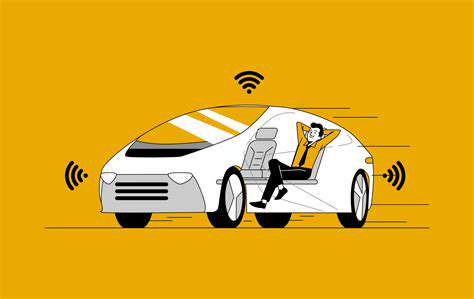

Sensor fusion finds numerous applications in various fields. In robotics, it's used for navigation, object recognition, and manipulation. In autonomous vehicles, it's crucial for perception, localization, and decision-making.

In healthcare, sensor fusion helps monitor patient vital signs and can support more accurate diagnosis of medical conditions. In each of these fields, the combined estimate is more dependable than the reading from any single sensor.

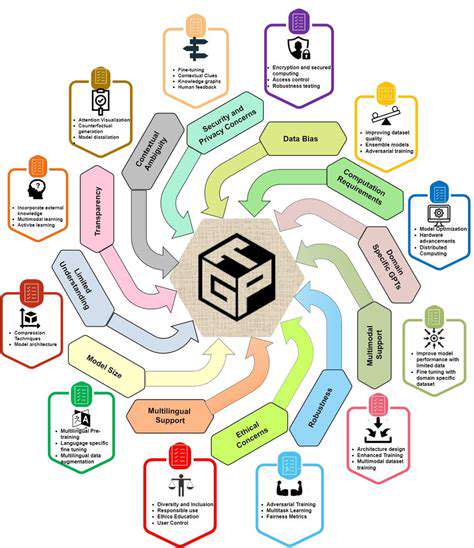

Challenges and Future Directions

Despite its advantages, sensor fusion faces challenges such as computational complexity and algorithm selection. Fusing several high-rate data streams in real time demands significant computing power, and choosing and tuning a suitable algorithm for a given set of sensors adds further design effort.

Current research focuses on developing more robust and efficient fusion algorithms to address these challenges. Advances in machine learning and artificial intelligence are expected to play a significant role, enabling more sophisticated applications in the future.

Perception: Interpreting the Data

Understanding Sensory Input

Autonomous driving algorithms rely heavily on sensory input to perceive the environment. This input, originating from various sensors like cameras, radar, and lidar, provides a rich dataset of information about the surrounding world. Sophisticated algorithms then process this data to extract meaningful features, such as the location, size, and speed of other vehicles, pedestrians, and obstacles. Effective interpretation of this data is crucial for safe and reliable navigation.

The quality and quantity of sensory data directly impact the accuracy and reliability of the autonomous driving system. Factors such as weather conditions, lighting, and the presence of occlusions can significantly affect the clarity and completeness of the perceived information. Robust algorithms must be able to adapt to these variations and uncertainties to ensure consistent and accurate data processing.

Object Recognition and Classification

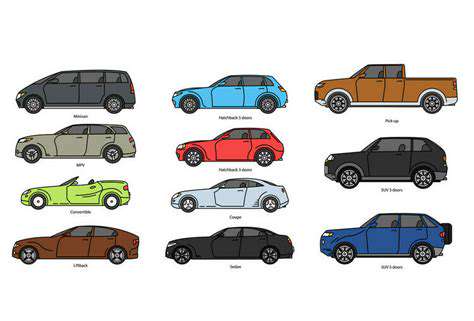

A key aspect of perception is the ability to recognize and classify objects. Algorithms must distinguish between vehicles, pedestrians, cyclists, and other potential hazards. This process involves complex image processing techniques and machine learning models trained on vast datasets. Sophisticated models can even recognize finer distinctions, such as vehicle subtypes or the posture of pedestrians.

Accurate object recognition is essential for safe and efficient navigation. Correctly identifying and classifying objects allows the autonomous vehicle to make appropriate decisions, such as maintaining safe distances, avoiding collisions, and following traffic rules.

Spatial Mapping and Localization

Autonomous vehicles need to understand their position and orientation within the environment. This involves constructing a spatial map of the surrounding area and localizing the vehicle's position on that map. Sophisticated algorithms process sensor data to build a comprehensive representation of the road network, including lane markings, traffic signals, and other relevant landmarks.

Precise localization is critical for safe navigation and accurate path planning. The vehicle needs to know its exact location at any given moment to make informed decisions about its trajectory and avoid collisions with other objects.

Traffic Sign and Signal Recognition

Autonomous vehicles must be able to identify and interpret traffic signs and signals to comply with traffic regulations. This involves image processing techniques that recognize the shapes, colors, and symbols on traffic signs. Recognizing the current state of traffic signals is equally important for deciding when to stop and when to proceed.

Predictive Modeling of Behavior

Autonomous driving algorithms go beyond simply recognizing static objects. They also need to predict the behavior of other road users. By analyzing past patterns and current sensor data, algorithms can estimate where pedestrians, cyclists, and other vehicles are likely to move next. This predictive capability lets the vehicle identify potential hazards early and react proactively.

Predictive modeling is a crucial component of advanced driver-assistance systems and autonomous driving. Accurately predicting the behavior of other road users allows the autonomous vehicle to make more informed decisions and respond to changing traffic conditions in a timely and safe manner.
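The simplest form of such prediction is constant-velocity extrapolation: assume a road user keeps its current speed and heading over a short horizon. The sketch below is only an illustration of that baseline. The `Track` class, its fields, and the helper names are hypothetical, and production systems use far richer motion and intent models.

```python
from dataclasses import dataclass


@dataclass
class Track:
    """Current estimate for one road user (positions in m, speeds in m/s)."""
    x: float
    y: float
    vx: float
    vy: float


def predict_position(track, dt):
    """Extrapolate the position dt seconds ahead at constant velocity."""
    return (track.x + track.vx * dt, track.y + track.vy * dt)


def predict_path(track, horizon_s, step_s):
    """Predicted positions at each step up to the horizon."""
    steps = int(horizon_s / step_s)
    return [predict_position(track, step_s * i) for i in range(1, steps + 1)]


# A pedestrian walking along the x axis at 1.5 m/s.
pedestrian = Track(x=0.0, y=0.0, vx=1.5, vy=0.0)
print(predict_position(pedestrian, 2.0))  # (3.0, 0.0)
```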

Environmental Context Understanding

Autonomous vehicles need to understand the broader environmental context, including weather conditions, road conditions, and potential hazards. This understanding allows the vehicle to adjust its driving behavior accordingly. For example, in adverse weather conditions, the vehicle might need to reduce its speed or adjust its braking strategy to maintain safety.

The ability to understand and react to environmental context is essential for the robustness and reliability of autonomous driving systems. This allows the vehicle to adapt to different situations and maintain safe operation in various real-world scenarios.
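The speed-reduction example can be sketched with the basic stopping-distance relation d = v^2 / (2 * mu * g), which gives the highest speed from which the vehicle can stop within a given distance. The friction coefficients below are assumed, illustrative values rather than measured data, and the sketch ignores reaction time and road grade. The names `FRICTION` and `max_safe_speed` are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2

# Assumed, illustrative tire-road friction coefficients.
FRICTION = {"dry": 0.7, "wet": 0.4, "snow": 0.2}


def max_safe_speed(stopping_distance_m, condition):
    """Highest speed (m/s) from which the vehicle can brake to a stop
    within stopping_distance_m under the given road condition."""
    mu = FRICTION[condition]
    return math.sqrt(2.0 * mu * G * stopping_distance_m)


for condition in ("dry", "wet", "snow"):
    speed_kmh = max_safe_speed(50.0, condition) * 3.6
    print(f"{condition}: {speed_kmh:.0f} km/h")
```

With the same clear distance ahead, the permitted speed drops as the road gets more slippery, which is the adjustment described above.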
