Understanding the Foundation of Sensor Fusion

Sensor fusion combines inputs from multiple sensors to build a more complete and precise picture of a system or environment than any single sensor can provide. It goes beyond simple data aggregation: algorithms synthesize information from several sources into one estimate. This integration is essential for adaptable systems that must handle changing conditions and still deliver meaningful insights.

Consider autonomous vehicles, which depend on cameras, radar, and lidar to interpret their surroundings. Sensor fusion combines these separate data streams into a unified view of road conditions, pedestrians, and other vehicles, which the vehicle then uses to make navigation decisions.

Types of Sensors and Data Integration

Modern systems incorporate many sensor types, each offering a different perspective: accelerometers, gyroscopes, GPS units, cameras, microphones, and pressure sensors, among others. Fusion requires converting raw data into compatible formats and applying algorithms, often machine learning-based, to reconcile discrepancies between sources.

Different sensors capture information in different formats and units, and at different sampling rates. Preprocessing therefore has to resample the streams onto a common time base and normalize their values before they can be combined accurately.
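The alignment and normalization step can be sketched as follows. This is a minimal illustration, not a prescribed pipeline: the two streams, their sampling rates, and the helper names `resample_linear` and `zscore` are assumptions made for the example, and linear interpolation with z-score scaling is only one of several reasonable choices.

```python
from bisect import bisect_left
from statistics import mean, pstdev


def resample_linear(times, values, target_times):
    """Linearly interpolate (times, values) onto target_times.

    times must be sorted ascending. Targets outside the sampled range
    are clamped to the nearest endpoint value.
    """
    out = []
    for t in target_times:
        i = bisect_left(times, t)
        if i == 0:
            out.append(values[0])
        elif i == len(times):
            out.append(values[-1])
        else:
            t0, t1 = times[i - 1], times[i]
            v0, v1 = values[i - 1], values[i]
            w = (t - t0) / (t1 - t0)
            out.append(v0 + w * (v1 - v0))
    return out


def zscore(values):
    """Scale values to zero mean and unit (population) standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Hypothetical streams: an accelerometer at 10 Hz and a pressure sensor at 4 Hz.
accel_t = [i * 0.1 for i in range(11)]            # 0.0 .. 1.0 s
accel_v = [0.5 * t for t in accel_t]
press_t = [i * 0.25 for i in range(5)]            # 0.0 .. 1.0 s
press_v = [1013.0 + 2.0 * t for t in press_t]

common_t = [i * 0.25 for i in range(5)]           # shared 4 Hz time base
accel_on_common = resample_linear(accel_t, accel_v, common_t)
press_on_common = resample_linear(press_t, press_v, common_t)

# After normalization both streams are unitless and comparable.
accel_norm = zscore(accel_on_common)
press_norm = zscore(press_on_common)
```

Resampling to the slower sensor's rate avoids inventing detail the slower stream never measured; a real system might instead pick the rate its downstream estimator needs.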

Challenges in Sensor Fusion

Despite its benefits, sensor fusion presents notable obstacles. Data inconsistency is a primary concern, particularly when sensors deliver conflicting or incomplete readings. Real-time processing is another: fusion algorithms must be robust enough to keep pace with continuous data streams. Finally, the computational resources and cost of advanced fusion systems may limit their adoption in some applications.
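One simple way to cope with conflicting or missing readings from redundant sensors is to discard outliers around the median before averaging. The sketch below assumes several sensors measure the same quantity; the function name `robust_fuse`, the `max_dev` threshold, and the sample values are hypothetical, and a production system would likely use a model-based approach instead.

```python
from statistics import median


def robust_fuse(readings, max_dev=3.0):
    """Fuse redundant readings of one quantity.

    Missing readings (None) are skipped. Readings more than max_dev
    median absolute deviations (MAD) from the median are treated as
    conflicting and dropped. Returns the mean of the remaining readings,
    or None if no reading is available.
    """
    present = [r for r in readings if r is not None]
    if not present:
        return None
    med = median(present)
    mad = median([abs(r - med) for r in present])
    if mad == 0:
        # At least half of the readings coincide with the median.
        return med
    kept = [r for r in present if abs(r - med) / mad <= max_dev]
    return sum(kept) / len(kept)


# Hypothetical distance readings: one sensor dropped out, one disagrees strongly.
fused = robust_fuse([10.1, 9.9, None, 10.0, 42.0])
```

Here the missing value is ignored and the reading of 42.0 is rejected, so the result is the average of the three readings that agree.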

Applications of Sensor Fusion in AI

Sensor fusion is applied widely across artificial intelligence domains. Robots use it for precise navigation and task execution, and autonomous vehicles rely on it for accurate perception and decision-making. Healthcare applications include comprehensive patient monitoring, and industrial settings use it for process control. Integrating multi-sensor data expands what an AI system can perceive, which supports more nuanced understanding and better-informed decisions.

Accuracy and Reliability in Sensor Fusion

Precision is critical in fused systems because individual sensor errors can propagate through the integration process. Rigorous calibration and validation help minimize such errors. Continuous performance evaluation identifies and corrects systematic biases, and noise filtering keeps the data consistent. Together, these measures improve the reliability of AI-driven decisions based on fused sensor data.
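To illustrate how per-sensor error affects a fused estimate, the sketch below uses inverse-variance weighting, a standard technique for combining independent, unbiased measurements of the same quantity. The function name and the sample readings are assumptions for the example, and the variances are taken as known from calibration.

```python
def fuse_inverse_variance(estimates):
    """Combine independent (value, variance) estimates of one quantity.

    Each estimate is weighted by 1 / variance, so noisier sensors
    contribute less. Returns (fused_value, fused_variance).
    """
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    fused_value = sum(w * val for w, (val, _) in zip(weights, estimates)) / total
    return fused_value, 1.0 / total


# Hypothetical range readings in metres as (value, variance) pairs.
fused_value, fused_variance = fuse_inverse_variance([(10.2, 0.04), (9.8, 0.16)])
```

The fused variance is smaller than that of either input, which is the benefit of fusion when the error estimates are trustworthy. If a sensor's stated variance is too optimistic, its errors dominate the result, which is why calibration matters.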

The Future of Sensor Fusion and AI

Ongoing advancements in sensor technology and AI algorithms continue pushing the boundaries of data fusion capabilities. Emerging techniques promise more sophisticated integration of diverse sensor inputs, yielding increasingly accurate environmental interpretations. This progress will unlock novel AI applications across sectors including healthcare, manufacturing, transportation, and environmental monitoring, cementing sensor fusion's role as a critical enabler of technological innovation.

Ethical Considerations and Decision-Making Frameworks

Ethical Implications of AI in Decision-Making

Artificial intelligence systems involved in consequential decisions raise profound ethical questions. Algorithmic biases, often reflecting historical prejudices in training data, may perpetuate discrimination—for instance, in loan approval systems favoring certain demographics. The opacity of some AI decision processes compounds these concerns, creating accountability challenges in critical domains like healthcare and criminal justice.

The Importance of Fairness and Equity in AI Systems

Equitable AI development demands proactive bias identification and mitigation throughout system design and deployment. Continuous fairness monitoring ensures technologies promote inclusive outcomes rather than exacerbating existing disparities. Achieving this requires multidisciplinary collaboration among technologists, policymakers, and ethicists to establish frameworks prioritizing accessibility and justice.
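As one concrete form of fairness monitoring, the gap in approval rates between groups (often called the demographic parity difference) can be tracked over time. The sketch below is illustrative only: the group labels, the sample decisions, and the function names are hypothetical, and a single metric does not establish that a system is fair.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group.

    decisions is an iterable of (group, approved) pairs, where approved
    is a bool.
    """
    counts = defaultdict(lambda: [0, 0])  # group -> [approved, total]
    for group, approved in decisions:
        counts[group][0] += int(approved)
        counts[group][1] += 1
    return {g: a / n for g, (a, n) in counts.items()}


def demographic_parity_gap(decisions):
    """Difference between the highest and lowest group approval rates."""
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical loan decisions for two groups.
decisions = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
gap = demographic_parity_gap(decisions)
```

A gap near zero means groups are approved at similar rates. A large gap is a signal to investigate, not proof of discrimination on its own.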

Transparency and Explainability in AI Decision-Making

Building trust in AI systems requires that their decisions can be explained, particularly in high-impact applications. Explainable AI (XAI) research develops methods to visualize and interpret algorithmic processes, enabling meaningful human oversight. Although transparent systems are technically difficult to build, progress in interpretability techniques supports accountability and responsible AI adoption.

Decision-Making Frameworks for Addressing AI Ethics

Effective ethical frameworks emphasize human oversight, value alignment, and iterative evaluation. These guidelines should address potential harms, privacy concerns, and security requirements while ensuring AI applications align with societal values. Developing comprehensive ethical standards remains crucial for responsible AI deployment across industries.

Accountability and Responsibility in the AI Era

Determining liability for AI-driven decisions requires clear legal and ethical frameworks. Establishing transparent redress mechanisms builds public trust while ensuring accountability among developers, deployers, and users. Ongoing stakeholder dialogue must address evolving challenges in AI governance and oversight.

Challenges and Future Directions

Data Acquisition and Annotation

Developing autonomous systems demands vast, accurately labeled datasets, and producing them is resource-intensive and prone to human error. Training data also needs careful curation so that models do not learn biased behavior. Automated annotation tools show promise in reducing this effort while maintaining data quality.

Robustness and Reliability

Real-world operation demands systems capable of handling unpredictable conditions—from sensor noise to dynamic obstacles. Developing adaptive algorithms remains critical for ensuring consistent performance across diverse environments and use cases.

Ethical Considerations

As AI assumes greater autonomy, addressing accountability, fairness, and transparency becomes imperative. Clear ethical guidelines help resolve dilemmas about system errors and decision-making processes while maintaining public trust.

Computational Resources and Energy Efficiency

The substantial energy demands of complex AI systems necessitate optimization strategies. Innovations in hardware architecture and algorithmic efficiency promise more sustainable implementations without compromising performance.

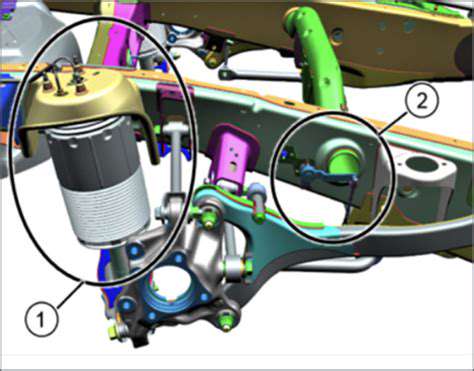

Integration with Existing Infrastructure

Successful deployment requires seamless interoperability with current systems. Standardized communication protocols and interface designs facilitate smooth integration across transportation networks, manufacturing processes, and other complex environments.

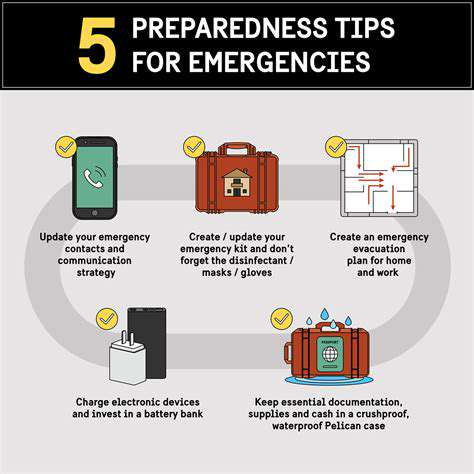

Safety and Security

Protecting autonomous systems from malicious interference demands robust cybersecurity measures. Implementing comprehensive safety protocols, including fail-safe mechanisms and continuous monitoring, ensures reliable operation while mitigating potential risks.
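A fail-safe built on continuous monitoring can be as simple as checking that every sensor has reported recently and switching to a safe mode otherwise. The sketch below assumes timestamps in seconds; the function name `select_mode`, the mode names, the sensor names, and the 0.2 s threshold are all hypothetical.

```python
def select_mode(last_update_times, now, max_age_s=0.2):
    """Choose an operating mode from sensor freshness.

    last_update_times maps sensor name -> time of its last reading.
    Returns ("normal", []) if every sensor reported within max_age_s,
    otherwise ("safe_stop", [names of stale sensors]).
    """
    stale = sorted(
        name for name, t in last_update_times.items() if now - t > max_age_s
    )
    if stale:
        return "safe_stop", stale
    return "normal", []


# Hypothetical status: the camera has not reported for 0.5 s.
mode, stale_sensors = select_mode({"lidar": 9.95, "camera": 9.5}, now=10.0)
```

Defaulting to the safe mode whenever data is stale means a silent sensor failure degrades the system conservatively instead of letting it act on old information.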

Human-Machine Collaboration

Future systems will likely emphasize synergistic human-AI interaction. Developing intuitive interfaces and effective training protocols enables productive collaboration while maintaining appropriate human oversight in critical applications.