Different SLAM Approaches

Iterative Closest Point (ICP)

ICP is a widely used method in SLAM for registering point clouds from consecutive frames. It iteratively refines the transformation between the two clouds by minimizing the distance between corresponding points: each iteration pairs every point with its nearest neighbor in the other cloud, then solves for the rigid transformation (rotation and translation) that best aligns those pairs, typically in the least-squares sense. ICP works well when the clouds start relatively close together and overlap substantially. Its performance degrades under significant occlusion or large motion between frames, where correspondences become unreliable, and because each pairwise registration error carries forward into later poses, the estimated trajectory can drift.

ICP also relies on a good initial guess for the transformation. Because correspondences are chosen by proximity under the current estimate, a poor initial guess can pair the wrong points and cause the algorithm to converge to a local minimum instead of the global optimum. Robust initialization techniques are often necessary to ensure accurate and reliable registration.
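To make the iterate-match-align loop concrete, here is a minimal 2D sketch using only the Python standard library. It is an illustration under simplifying assumptions, not a production implementation: correspondences are found by brute-force nearest-neighbor search, there is no outlier rejection, and the function names (`best_rigid_transform_2d`, `apply_transform`, `icp_2d`) are hypothetical names chosen for this example.

```python
import math

def best_rigid_transform_2d(src, dst):
    """Least-squares rotation angle and translation mapping paired src points onto dst."""
    n = len(src)
    cx_s = sum(p[0] for p in src) / n
    cy_s = sum(p[1] for p in src) / n
    cx_d = sum(p[0] for p in dst) / n
    cy_d = sum(p[1] for p in dst) / n
    s_dot = 0.0    # sum of dot products of centered pairs
    s_cross = 0.0  # sum of 2D cross products of centered pairs
    for (xs, ys), (xd, yd) in zip(src, dst):
        ax, ay = xs - cx_s, ys - cy_s
        bx, by = xd - cx_d, yd - cy_d
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)  # closed-form optimum in 2D
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the target centroid.
    tx = cx_d - (c * cx_s - s * cy_s)
    ty = cy_d - (s * cx_s + c * cy_s)
    return theta, tx, ty

def apply_transform(points, theta, tx, ty):
    """Rotate each point by theta about the origin, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]

def icp_2d(source, target, max_iters=50, tol=1e-9):
    """Align source to target; returns the moved points and the final mean squared error."""
    current = list(source)
    prev_err = float("inf")
    err = float("inf")
    for _ in range(max_iters):
        # Step 1: pair each point with its nearest target point (brute force).
        matched = [
            min(target, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
            for p in current
        ]
        # Step 2: solve for the rigid transform that best aligns the pairs.
        theta, tx, ty = best_rigid_transform_2d(current, matched)
        current = apply_transform(current, theta, tx, ty)
        # Step 3: stop once the mean squared error no longer changes.
        err = sum(
            (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2
            for p, q in zip(current, matched)
        ) / len(current)
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return current, err
```

Calling `icp_2d(source, target)` on two lists of `(x, y)` tuples returns the aligned copy of `source`. The sketch also shows why initialization matters: if the starting offset is large relative to the point spacing, step 1 pairs the wrong points and the loop can settle in a local minimum.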

Simultaneous Localization and Mapping (SLAM)

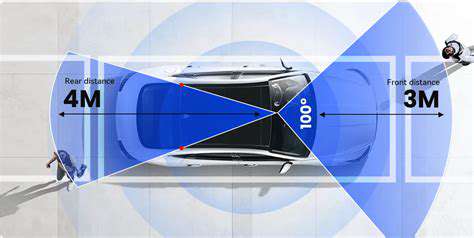

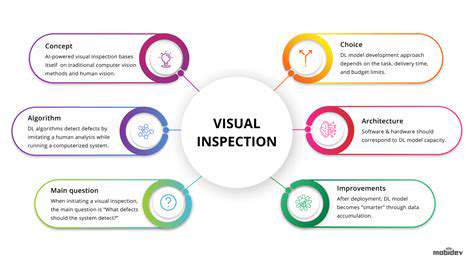

Simultaneous Localization and Mapping (SLAM) is a crucial component of autonomous robotics. It allows a robot to build a map of its environment while simultaneously determining its own location within that map. This process typically combines sensor data, such as laser scans or camera images, with mathematical models of motion and measurement to estimate both the map and the robot's pose. The fundamental challenge is balancing the computational cost of map building and localization against the accuracy and robustness of the final result.

Feature-Based SLAM

Feature-based SLAM approaches rely on identifying distinctive features in the environment, such as corners, lines, or points. These features are tracked and matched across consecutive sensor readings. This approach often yields accurate results in environments with enough distinctive features. However, homogeneous areas offer few features to extract, and cluttered areas produce many similar ones that are easy to mismatch; both can reduce the accuracy of the map and the localization.

Robust feature detection and matching algorithms are critical for successful feature-based SLAM. Different feature types and detectors, such as SIFT or SURF, can be employed to handle various environmental characteristics.
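As an illustration of the matching step, the sketch below pairs descriptors from two frames by nearest-neighbor distance and discards ambiguous matches with a ratio test, a filtering strategy commonly used with SIFT-style descriptors. It assumes descriptors are already available as plain tuples of floats; the function name `match_descriptors` and the default `ratio=0.8` are assumptions for this example, not values taken from any particular SLAM system.

```python
import math

def match_descriptors(desc_a, desc_b, ratio=0.8):
    """Return (i, j) index pairs where desc_a[i] matches desc_b[j] unambiguously."""
    matches = []
    for i, da in enumerate(desc_a):
        # Distance from this descriptor to every candidate in the other frame.
        dists = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        if len(dists) < 2:
            continue  # the ratio test needs at least two candidates
        (best, j), (second, _) = dists[0], dists[1]
        # Keep the match only if the best candidate is clearly closer
        # than the runner-up; otherwise it is considered ambiguous.
        if best < ratio * second:
            matches.append((i, j))
    return matches
```

A descriptor that sits almost equally far from two candidates is dropped rather than guessed, which is one way to limit mismatches in repetitive or cluttered scenes.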

Visual SLAM

Visual SLAM uses computer vision techniques to estimate the robot's pose and build a map. Cameras capture images of the environment, and features such as corners or edges are extracted from those images, tracked across frames, and added to the map. This approach has gained significant attention due to the availability of affordable, high-resolution cameras. However, visual SLAM is sensitive to lighting changes, occlusions, and variations in scene appearance.

Robust visual SLAM algorithms need to handle these issues effectively. Techniques like robust feature descriptors, efficient matching algorithms, and sophisticated motion models are vital for accurate and reliable results.

Probabilistic SLAM

Probabilistic SLAM methods employ Bayesian inference to estimate the robot's location and the map. They model the uncertainty associated with sensor measurements and the robot's motion. This approach provides a comprehensive framework for dealing with noise and inaccuracies in sensor data, leading to more robust and reliable maps. However, probabilistic SLAM algorithms can be computationally intensive, especially for large-scale environments.

Efficient sampling techniques and optimization methods are crucial for managing the computational demands of probabilistic SLAM and for making it practical in large, complex scenarios.
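The Bayesian predict/update cycle behind these methods can be shown in its simplest form with a one-dimensional Kalman filter. This is a deliberately reduced sketch: it tracks only a scalar robot position with Gaussian noise rather than a full pose and map, and the function names `predict` and `update` are hypothetical names for this example.

```python
def predict(mean, var, motion, motion_var):
    """Motion step: shift the belief by the commanded motion and grow its uncertainty."""
    return mean + motion, var + motion_var

def update(mean, var, measurement, meas_var):
    """Measurement step: blend belief and measurement, weighted by their variances."""
    gain = var / (var + meas_var)  # Kalman gain, between 0 and 1
    new_mean = mean + gain * (measurement - mean)
    new_var = (1.0 - gain) * var   # uncertainty shrinks after a measurement
    return new_mean, new_var

# One cycle: start with a belief, move, then correct with a sensor reading.
belief = (0.0, 1.0)                                 # (mean, variance)
belief = predict(*belief, motion=1.0, motion_var=0.5)
belief = update(*belief, measurement=1.2, meas_var=0.5)
```

Motion always increases the variance and each measurement reduces it, which is how the filter represents uncertainty from both the robot's motion and its sensors. Full SLAM systems apply the same idea to a much larger state, which is where the computational cost comes from.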

Hybrid SLAM

Hybrid SLAM approaches combine different SLAM techniques to leverage their respective strengths. For example, a system might use visual SLAM for initial localization and mapping in outdoor environments, then switch to a more detailed feature-based SLAM approach for indoor navigation. This approach aims to achieve a balance between robustness, accuracy, and computational efficiency. Hybrid SLAM can be tailored to specific application requirements and environmental characteristics.

Designing a robust and efficient hybrid system requires careful consideration of the different SLAM approaches and their performance characteristics in various scenarios. Integration of sensor data from different modalities, like cameras and laser scanners, plays a crucial role.
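One simple way to combine estimates from different sensors is inverse-variance weighting, sketched below. The sketch assumes each modality reports an independent, unbiased estimate of the same scalar quantity together with a variance; real hybrid systems fuse full poses and must handle correlated errors. The function name `fuse_estimates` and the sample numbers are illustrative only.

```python
def fuse_estimates(estimates):
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Assumes the estimates are independent and unbiased.
    """
    weight_sum = sum(1.0 / var for _, var in estimates)
    fused_value = sum(value / var for value, var in estimates) / weight_sum
    fused_var = 1.0 / weight_sum  # never larger than the smallest input variance
    return fused_value, fused_var

# Hypothetical range-to-wall estimates in meters: (value, variance).
camera_estimate = (2.0, 0.04)
laser_estimate = (2.2, 0.01)
fused = fuse_estimates([camera_estimate, laser_estimate])
```

The more certain sensor dominates the result, and the fused variance is smaller than either input, which is the basic argument for integrating multiple modalities.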